In a series of articles for Native Instruments, Kim Bjørn, author of PUSH TURN MOVE, explores methods for interfacing with music through hardware, software, or a combination of both. In this first installment, he covers technology and techniques for creating expression.

Musical expression is often defined as a performance with a very personal response. The human voice might be the ultimate example of this, able to mesmerize an audience with its highly expressive nature: fluid modulation of amplitude and pitch; an evocative timbre that’s intrinsically human and alive; and of course, the articulation of words, which themselves hold further meaning.

There’s a visual element to performance too. The connection between sound and its source is obvious: we can see where the sound originates from, and what effort it takes to make it. This isn’t limited to vocalists, of course. Experienced theremin players, for instance, similarly express themselves through ultra-precise microtonal notes; subtle vibrato by modulating pitch; and sudden sweeps and tremolo by manipulating volume. Carolina Eyck is one such thereminist — notice the dynamics in pitch, volume, and articulation in her performance.

Electronically created music is not always about a personal relationship. However, most producers would agree that creating expression is crucial for successful productions, whether they’re intended to move the dancefloor at a club, or move the audience at the cinema.

Now we’ll look closely at key aspects of expression, beginning with the fundamental parameters before moving on to technical goals of expression, and the technology required to achieve them.

“Playing a theremin combines audio and physical expression in a very distinct way.” – Dorit Chrysler, thereminist, composer and musician

Volume and Timbre

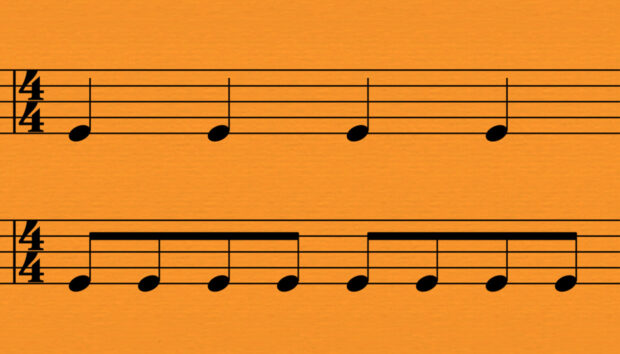

In contrast with the sparsely notated scores of the Baroque period, 19th-century composers started to mark intended expression and dynamics in more specific ways. Users of symphonic sample libraries are well accustomed to Italian words like pianississimo (as soft as possible) or fortississimo (as loud as possible). The dynamic markings run from softest to loudest as ppp, pp, p, mp, mf, f, ff, fff, corresponding to MIDI velocity levels ranging from roughly 15 to 127.
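As a rough illustration of that mapping, the Python sketch below spreads the eight dynamic markings evenly across the quoted velocity range. The even spacing and the name `dynamic_to_velocity` are assumptions made for illustration; sample libraries and notation programs each choose their own values.

```python
# Hypothetical mapping: eight dynamic markings spread evenly over velocities 15..127.
DYNAMICS = ["ppp", "pp", "p", "mp", "mf", "f", "ff", "fff"]
LOW, HIGH = 15, 127  # rough velocity range quoted in the text


def dynamic_to_velocity(marking: str) -> int:
    """Return an approximate MIDI velocity for a dynamic marking."""
    index = DYNAMICS.index(marking)  # raises ValueError for an unknown marking
    step = (HIGH - LOW) / (len(DYNAMICS) - 1)  # even spacing is an assumption
    return round(LOW + index * step)


for marking in DYNAMICS:
    print(f"{marking:>3} -> {dynamic_to_velocity(marking)}")
```

With even spacing each marking sits 16 velocity units above the previous one, which is a handy starting point when programming dynamics by hand before adjusting by ear.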

Although electronic instruments and synthesizers offer many parameters for expressive manipulation, volume remains among the most important. When acoustic instruments are blown, bowed or struck harder, this creates a change not just in volume but also in timbre, and many electronic instruments recreate this by using MIDI velocity to modulate more than just amplitude levels. Less commonly understood is that MIDI CC11 is dedicated to Expression, allowing musicians a further way to control playing ‘hardness’ (CC7, Volume, is related, but is intended only to control output level and not timbre; it’s more like the level fader on a mixer). Often, an expression pedal is used, which is quite different from a volume pedal, as explained in this video.
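To make the difference between the two controllers concrete, here is a minimal Python sketch that builds the raw MIDI messages involved. The helper names `control_change` and `pedal_to_cc` are hypothetical, and the pedal is assumed to report a position between 0.0 and 1.0; how an instrument responds to CC11 depends entirely on the instrument.

```python
CC_VOLUME = 7       # channel volume: overall output level, like a mixer fader
CC_EXPRESSION = 11  # expression: performance dynamics within that level


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a raw 3-byte MIDI Control Change message (channel is 0-15)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not 0 <= controller <= 127 or not 0 <= value <= 127:
        raise ValueError("controller and value must be 0-127")
    return bytes([0xB0 | channel, controller, value])


def pedal_to_cc(position: float) -> int:
    """Convert an assumed pedal position in 0.0-1.0 to a 7-bit controller value."""
    clamped = min(max(position, 0.0), 1.0)
    return round(clamped * 127)


# Set the mix level once, then ride expression during the performance.
set_level = control_change(0, CC_VOLUME, 100)
swell = [control_change(0, CC_EXPRESSION, pedal_to_cc(p)) for p in (0.25, 0.5, 1.0)]
```

The bytes could be sent to any MIDI output; the point of the sketch is that volume is set and left alone, while expression is the stream that changes while you play.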

Of course, there’s more to expression in electronic instruments than just volume and velocity. Even as far back as 1928, the Ondes Martenot, one of the first popular and practical electronic instruments, offered very expressive controls for manipulating pitch, dynamics, timbre and articulation of notes. Several controls are used in combination, and the keys can move sideways, providing slight vibrato when wiggling them. The Ondomo is a modern Japanese recreation of this very instrument.

Technical goals of expression

In electronic instruments, we can distinguish between two overall technical reasons for using expression:

Emulation: This means mimicking the sound and playing style of an acoustic instrument, such as a guitar, saxophone, cello, or a symphony orchestra. This requires not only well-crafted software, but the hardware must offer sufficient and appropriate control over relevant parameters. Usually, that means expressive controls like keys, wheels, knobs, faders, touch-strips, etc, but could go as far as emulating the playing experience of the original instrument itself, as with a wind controller, MIDI guitar or the jog wheels on a DJ controller.

Variation: When working with synthesized sounds, a certain amount of “life” is desirable. To add interest or a sense of evolution, techniques like layering different sounds, crossfading between layers, opening a filter, etc, become essential, especially in sustained sounds like pads, which don’t feature a characterful attack. In dance music, the most recognisable use of expression is probably the crescendo (or decrescendo) before the drop, obtained by filtering frequencies or modulating pitch.

The importance of control

Electronic instruments, as opposed to acoustic instruments, are rarely expressive by nature. Instead, sound originates in the circuits or software, with physical or virtual controls allowing the artist to add expression by altering parameters. The more control options, the greater the capacity for expressive results.

The legendary Yamaha CS-80 from 1977 is considered one of the most expressive analog synthesizers ever made, due in very large part to its responsive tactile controls. It features a long ribbon controller with the ability to begin bends at the first point of contact, and a keyboard with pressure sensors under each key for finely nuanced timbre control, here demonstrated by Vangelis, who used it on the original Blade Runner soundtrack. Having used the CS-80 for the soundtrack of Blade Runner 2049, composer Hans Zimmer spoke in an interview about the experience: “It responds to your touch; it translates your soul and your musicality the way a musical instrument is supposed to.”

Image: Perfect Circuit Audio

When soloing with synths and other electronic instruments, many of the expressive characteristics of acoustic instruments become desirable, such as manipulating the pitch more fluently than a set of “on-off” keys allows. The following iconic clip of George Duke using the “whang bar” on his Castlebar clavinet is a great example of how a single control can create a highly entertaining and expressive performance.

Expressive controls

There are myriad physical controls for drawing expression from electronic instruments. Here we explore some of the most common — and a few of the most esoteric.

Control Wheels

Since the Minimoog, left-hand controls have been the standard control accompaniment to the keyboard, with the usual suspects being the pitchbend and modulation wheels. Though you can (usually) map it as you please, many software instruments have the modulation wheel (MIDI CC1) pre-mapped to great effect. With SYMPHONY SERIES by Native Instruments, for example, the modulation wheel controls the large Dynamics knob that’s central to the interface of each instrument. This allows for altering loudness and timbre even while sustaining notes. Other typical uses for the modulation wheel include opening and closing filters, adding vibrato, or crossfading between sample layers.
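One of those typical uses, crossfading between sample layers, can be sketched in a few lines of Python. This is an illustrative equal-power crossfade driven by a CC1 value, not a description of how SYMPHONY SERIES or any other instrument implements its Dynamics control; the function name and the two-layer setup are assumptions.

```python
import math


def crossfade_gains(mod_wheel: int) -> tuple[float, float]:
    """Map a CC1 value (0-127) to gains for a soft layer and a loud layer."""
    position = min(max(mod_wheel, 0), 127) / 127  # normalise to 0.0-1.0
    soft = math.cos(position * math.pi / 2)  # fades out as the wheel rises
    loud = math.sin(position * math.pi / 2)  # fades in as the wheel rises
    return soft, loud


for value in (0, 64, 127):
    soft, loud = crossfade_gains(value)
    print(f"CC1={value:3d}  soft={soft:.2f}  loud={loud:.2f}")
```

The cosine and sine curves keep the combined power of the two layers constant, so the sound changes character as the wheel moves without dipping in level halfway through.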

“Electronic music is far from cold, faceless or devoid of emotion — in fact, it is truly quite the opposite, as far as I am concerned.” – Jean-Michel Jarre

Breath Controllers

If you’ve got two left feet, you might want to try a breath controller instead, meaning a device that allows the user to blow harder or softer to control parameters. Yamaha pioneered the idea, and its legendary DX7 synth offered breath control as an external control option (in addition to expression pedals). This compensated slightly for the lack of tweakable controls (beyond the pitch and mod wheels) on the front panel, which mostly consisted of flat membrane buttons supporting the design statement of a futuristic digital synthesizer of the 1980s.

Breath controllers aren’t as common nowadays, but that doesn’t diminish their potential for creative expression. In fact, a breath controller is an amazing tool for working with sample libraries and emulated instruments, or just to bring extra expression to your burning synth solos or evolving soundscapes.

In this video, Ramiro J. Gómez Massetti shows how the use of a breath controller and the Leap Motion motion controller can bring an impressive degree of expression to the KONTAKT instruments from Samplemodeling. These techniques can be explored with any software instrument with MIDI mappable parameters.

If you’d like to try one, the New-Type Breath controller developed in Japan is a good option — it allows the user to switch MIDI channels on the fly, and it can be mounted on a stand, as shown below:

Photo: Tatsuya Nishiwaki

Gesture Driven

For more dramatic impact, hand- or body-gesture-driven controllers can create a great visual connection to sound shaping. Roland developed the D-Beam infrared controller which first appeared on the MC-505 back in 1998, and since then numerous controllers have emerged utilizing the movement of hands in the air. Not quite in fashion yet, finger rings seem to be on the rise. The Wave ring senses three kinds of movement: sideways, up/down and rolling of the hand. Such a controller is ideal for shaping your sounds while playing or DJing — for example, mapping it to control effects in TRAKTOR frees up your fingers for other duties.

Sensors

Sensors are devices which detect or measure physical properties and output signals in response. Sensors can be used to add expressiveness or provide alternative input to alter or generate sound. One of the interesting new controllers is the Expressive E Touché, which is highly touch sensitive and senses motion and pressure in four directions. Combine it with a synthesizer like KONTOUR for extremely organic results.

Touch Strips

In designing an electronic instrument or controller, potential for expression should always be considered. For instance, the use of touch-strips on the MASCHINE JAM allows for expressive features like note strumming and performance effects control. Being able to use four controls with just one hand (or all eight with both) gives powerful potential for expression — knobs, for example, would not have permitted this number of simultaneous adjustments by hand.

Aftertouch and MPE

Aftertouch was originally a feature of the clavichord, and later the Mellotron; Yamaha introduced it in synthesizers with the CS-80. This feature allows the player to further influence the sound after its initial attack by applying pressure to the keys. The CS-80 featured individual aftertouch per note. This kind of individual aftertouch has been reintroduced in an even more expressive format: MPE (MIDI Polyphonic Expression). This MIDI standard requires compatible controllers (like the LinnStrument by Roger Linn, ROLI Seaboard, Haken Continuum, Madrona SoundPlane, or Eigenlabs Eigenharp) that often work with dedicated software. It’s possible to use MPE with REAKTOR by using User Library Blocks designed for the task, and if you have a Seaboard, check this guide.

The MPE standard allows the user to manipulate individual notes in a polyphonic passage (that is, notes within chords), much like a guitarist or violinist. Take a listen to the expressive capabilities of MPE in this fascinating performance where Marco Parisi plays “Purple Rain” on the Seaboard RISE at last year’s NAMM. If you want to try it for yourself, here is a great tutorial for playing KONTAKT guitar Libraries on the ROLI Seaboard RISE.
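The core idea behind MPE is that each sounding note travels on its own MIDI channel, so channel-wide messages such as pitch bend affect only that note. The Python sketch below is a toy illustration of that idea: the class `MpeAllocator` is hypothetical, it uses 0-based channel numbers with channel 0 reserved as the master channel, and it leaves out details a real controller handles, such as voice stealing and zone configuration.

```python
class MpeAllocator:
    """Toy allocator: gives each sounding note its own MIDI channel."""

    def __init__(self, member_channels=range(1, 16)):
        # 0-based channel numbers; channel 0 is left as the master channel.
        self.free = list(member_channels)
        self.active = {}  # note number -> channel

    def note_on(self, note: int, velocity: int) -> bytes:
        if not self.free:
            raise RuntimeError("no free channel (a real device would steal one)")
        channel = self.free.pop(0)
        self.active[note] = channel
        return bytes([0x90 | channel, note, velocity])

    def bend(self, note: int, amount: float) -> bytes:
        """Bend one note only; amount is -1.0..1.0 of the bend range."""
        channel = self.active[note]
        value = min(max(round(8192 + amount * 8192), 0), 16383)  # 14-bit, centre 8192
        return bytes([0xE0 | channel, value & 0x7F, value >> 7])

    def note_off(self, note: int) -> bytes:
        channel = self.active.pop(note)
        self.free.append(channel)
        return bytes([0x80 | channel, note, 0])


mpe = MpeAllocator()
c_note = mpe.note_on(60, 100)  # C lands on channel index 1
e_note = mpe.note_on(64, 100)  # E lands on channel index 2
bend_e = mpe.bend(64, 0.5)     # bends the E while the C stays put
```

On a conventional single-channel keyboard the same pitch bend message would move both notes of the chord together, which is exactly the limitation MPE removes.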

Multi-touch

Devices such as Apple’s iPad have great potential for expressive gestures. Apps like Jordan Rudess’ Geoshred leverage the fluent playing style allowed by the flat surface, providing myriad possibilities for sliding notes or seamlessly changing parameter values. Adding an iPad to your setup is a quick and easy way to bring more expressive control to your software instruments.

In this video, electronic music pioneer Suzanne Ciani performs with a few multi-touch surfaces alongside her Buchla system: an iPad with the expressive Animoog app, another using the motion detection features to control noise, and the Buchla Multi-Dimensional Kinesthetic Input port.

3 expression workflows

Let’s look at the three slightly different ways we obtain expression in electronic music.

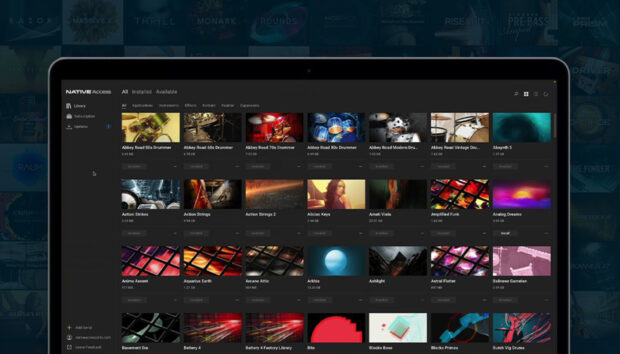

Performed. The first is in live situations, where you tweak physical controls to obtain a certain expression in real time. Having enough dedicated controls makes it easier. Using the eight knobs on the KOMPLETE KONTROL keyboard, you can immediately get hands-on with the ready-mapped parameters — or rapidly remap them with the KOMPLETE KONTROL software. Expand the expressivity of your setup with additional controllers like an iPad or expression pedal.

Created. The second is during production, where we use automation of instrument and effects parameters to add expression. In DAWs, automation typically uses editable lines and curves that determine value changes over time. Automation moves can also be recorded using the mouse or your control hardware — the Automation button on the KOMPLETE KONTROL keyboard is a fast and intuitive option.

Animated. The third method is a kind of in-between “fake” expressive playing, where automatic modulation — like triggered envelopes or LFOs — creates change in the sound. The many envelopes of MASSIVE and ABSYNTH come in handy here; synths like ROUNDS, KONTOUR, and FORM have dedicated features for animating parameters.
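The "created" and "animated" workflows can be sketched together in Python: an automation lane stored as breakpoints and read by linear interpolation, with a sine LFO adding movement on top. The function names, the cutoff values and the LFO settings are all assumptions for illustration; DAWs and synths offer many more curve shapes and modulation sources.

```python
import math


def automation_value(points, time):
    """Linearly interpolate a parameter from (time, value) breakpoints sorted by time."""
    if time <= points[0][0]:
        return points[0][1]
    for (t0, v0), (t1, v1) in zip(points, points[1:]):
        if time <= t1:
            return v0 + (v1 - v0) * (time - t0) / (t1 - t0)
    return points[-1][1]  # hold the last value after the final breakpoint


def lfo(time, rate_hz, depth):
    """Sine LFO: returns an offset between -depth and +depth."""
    return depth * math.sin(2 * math.pi * rate_hz * time)


# "Created": a filter cutoff ramp drawn as breakpoints (seconds, Hz).
cutoff_lane = [(0.0, 200.0), (4.0, 2000.0), (8.0, 2000.0)]


def cutoff_at(time):
    """'Animated': a slow LFO wobbling around the automated value."""
    return automation_value(cutoff_lane, time) + lfo(time, rate_hz=0.5, depth=100.0)


for t in (0.0, 2.0, 4.0, 6.0):
    print(f"t={t:.1f}s  cutoff={cutoff_at(t):.1f} Hz")
```

Keeping the drawn automation and the automatic modulation as separate layers mirrors how most instruments work: the lane sets the broad gesture, and the LFO supplies the small-scale movement.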

So, go ahead: dive into your hardware interfaces and software tools to fully explore their potential for greater expressive results. Have fun expressing yourself!

PUSH TURN MOVE is a brand-new book on electronic music instruments and a must-have for every synth and design freak. It was rapidly funded in 2017 on Kickstarter and has reached thousands of readers in 55 countries. With a foreword by electronic music visionary Jean-Michel Jarre, the book celebrates the art and science of interface design in electronic music by exploring the functional, artistic, philosophical, and aesthetic worlds within the mysterious link between player and machine. Check the book out here.

KIM BJØRN is an electronic musician, composer, and designer with a profound interest in the interactions between people and machines. Based in Copenhagen, he gives regular talks and workshops within the creative field of interaction and design, has released six albums of ambient music and has performed live at venues and festivals around the world.

header image: Expressive E.