Sam Bird has been working on music and sound design for games for sixteen years. The Kentucky native moved to Los Angeles after attending Berklee College of Music in Boston, in search of a way to make a living exploring music and sound. After a chance run-in, Bird ended up at one of the biggest companies in the industry, Electronic Arts, where he would eventually come to hone his craft in the world of game audio.

Bird worked in various sound and music capacities at EA for seven years, developing sonic landscapes for series like Command and Conquer, Battlefield, and Medal of Honor, among others. He has since worked on sound design for the award-winning God of War (which recently took home a BAFTA award for best audio achievement), as well as titles ranging from the massive Starcraft 2 to the smaller-scale Skulls of the Shogun. He most recently wrapped up work on the upcoming game from Katamari Damacy creator Keita Takahashi, Wattam.

Bird’s current project harkens back to a formative cinematic experience from his youth, in the form of the upcoming Star Wars Jedi: Fallen Order. The project marks Bird’s return to the EA publishing stable, and is being developed by Respawn Entertainment, creators of the Titanfall series.

Bird spoke with us from his home in the Echo Park neighborhood of Los Angeles about the small joys and infinite complexities of working in the ever-evolving field of interactive audio.

You mentioned that sci-fi is kinda your happy place as a sound designer. Why do you think that is, and what are your go-to tools for it?

I generally measure the quality of music by “how much Beethoven” is in it. By that, I mean a yearning or reaching for truth — a very powerful and diverse set of feelings and energies that are often contradictory but still manage to guide you through an interesting journey.

Sound design is not that much different than music, in that similar cadences of tension and release form the foundation of a great sound and/or moment. Understanding that rhythm and capturing it is really important to making an impact. The great thing about sound design is that it’s more abstract than music, generally, so it allows more freedom.

The number one reason why sci-fi is my happy place is that there are no rules in sci-fi — you can explore as much as you want, and if you’re lucky, create something that has never been heard before. Sci-fi can be anything — it can be magical, mechanical, organic, artificial…the only limit is your imagination, and making sure it matches the energy and context of why you’re making it. I love that so much, because so often through following the creation of a sound, you can end up with something that’s completely unexpected. Being open to that is hugely important and also a challenge, because you have to be okay with failing pretty badly for the sake of discovery. When the magic happens, though, it’s deeply satisfying.

I lean on Reaktor and Native’s stuff pretty heavily for sci-fi, especially if there’s a deadline — and, oddly enough, if I have a lot of time (which is rare, but allows for deeper dives with the software). Honestly, when things need to get done fast, a lot of the creation is starting with presets with Reaktor Instruments – Rounds, Razor, Form, Skanner XT, Polyplex, Skrewell, Carbon 2, and Lazerbass — and just playing and modulating while recording. After rendering them to audio, I then pull them over into post to mangle them further. Also, I love effects like Molekular for taking something and completely altering what it was, and the non-Reaktor effects plug-ins like RC24/48, and Transient Master.

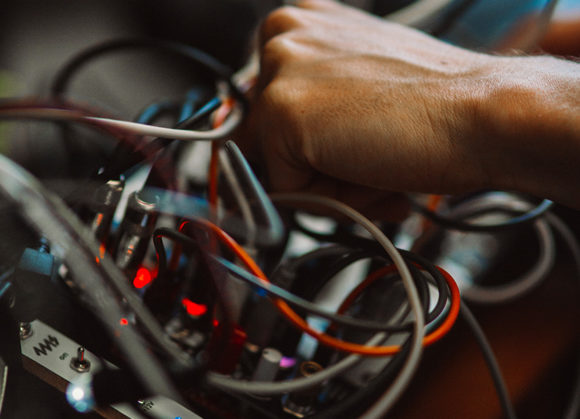

I also love my iPad, which is loaded with all kinds of pretty creative software synths. These often end up getting sent through my guitar pedalboard, before being brought back into a DAW; if needed, I pump them up a bit with my Neve 500 series pre-amps and EQs, Shadow Hills Dual Vandergraph, and Empirical Labs EL8X-S distressors.

I also use the OP-1, which needs no introduction. Oh, and I have a small Eurorack full of knobs to turn when I want half of the day (or more) to disappear and not know what happened.

Going out into the world and recording sounds à la Ben Burtt is, of course, usually another big part of the final product. Don’t forget to go outside, sometimes — it’s a remarkable place.

You mentioned a funny story about first coming into contact with Native.

I was a hair late to the game on Native Instruments. I was loosely aware, but the first time I ever really heard Reaktor was when Richard Devine performed at Recombinant Media Labs’ studio in San Francisco during AES. It was an odd space, both because they had an enormous number of discrete channels for the time (I think it was 12 surround, eight subs, and two-to-four floor shakers) and because you had to crawl into the live room through a kind of half-sized door.

Richard was set up with only his laptop in the center of the room, and he proceeded to rip apart time and space for the next two hours — it was brutal, and I loved it. At the start there were about 50 of us in the space, but by the end it was just me, a few friends, and a couple of other people, all lying on the floor getting pummeled by the floor shakers and subs. When he stopped, I remember he looked over at us and said, “You made it!” I had never heard anything like it in my life, and that was really exciting. We asked him what the main piece of software he was using for the performance was, and he said, “Reaktor.” I was working at EA at the time, and when I returned to work I immediately ordered Reaktor (and a bit later, Komplete).

How did you end up doing sound design? Was it music that led you there?

Music has always been the driving creative force for me. I have also always been very drawn to the production and character of a sound, especially in the context of music. I noticed very early on that bad production could involve very expensive gear and immensely talented musicians; and that, likewise, good production simply meant properly coloring the sound to fit its context, and could be achieved with cheap gear and even not-so-talented musicians. Good sound design lends itself to these parts of production: really homing in on a specific sound to fit the space it occupies. (Of course, having expensive gear and talented musicians does make things easier, if it’s being driven by good taste and a clear vision.)

So music has been the goal all along, and it’s just taken me a while to get there.

How have you found your workflow or creative process has changed over the many years that you’ve been working in this space?

My creative workflow hasn’t changed much, honestly, but my technique has certainly improved, simply from doing it over and over. With music and sound, catching that spark is the trick. Once I get inspired, I lose all track of time and the next thing I know I have something pretty close to done, and I don’t exactly know how I got there. Some days that spark doesn’t come, and when I was younger I’d bang my head against the wall until it did. I think getting older, I’ve learned to recognize when banging your head against the wall is necessary — and when it is better to sleep on it, or put it away for a few days to come back to. That also has led me to a deeper appreciation of experience in general, and other people’s experience as well. When I was younger, I thought that the sheer force of will could unseat wisdom.

What was your working relationship like with Keita Takahashi on Wattam? Was that different from most music and sound design jobs you’ve had in games?

I am eternally grateful to my friend Brad Fotsch, who I originally met back at EA, and who brought me onto this project to help him finish the music and sound. He’d been composing and working on the music system and many early versions of the game for three years prior to me coming aboard — along with compositions from Keita’s wife, Asuka Sakai (who wrote the title theme and most of the jazz-based cutscene music). Working with Keita, Brad and the rest of the team on Wattam has been truly awesome and the experience has been unlike anything I’ve ever done in AAA games. Keita has a fantastic sense of humor and truly great ideas.

I’ve worked with and around a lot of game producers/creative directors over the years, and dealt with a lot of half-baked ideas. One could argue that the problem with Keita is that his ideas are great…meaning, we’ve got to ship this game, and he comes to you with this bizarre music request, and the pragmatic side of you says, “No, we can’t do this, we don’t have time.” But the creative side of you says, “Holy fuck, that’s a great idea and we’re totally going to do this, even though it means we’re gonna miss any deadlines we had, and potentially put the entire success of the project at risk.” With Keita, I’m happy to say, the second voice tends to win, which is why I’d almost be willing to march off the edge of a cliff with the guy at this point.

For Wattam, I did use Komplete a lot — more than I have for any project, in fact. Battery, Massive, Reaktor and really heavy on Kontakt. For the core tracks, I’d often layer in acoustic instruments, such as playing real drums over parts of the song, and melody and lead lines were performed by 20 or so live musicians. Due to the way the interactive music system works, if you put all the recorded music back-to-back, it’d be 40+ hours of music.

I would say Komplete is the most valuable source creation suite of software available and that mastering it, along with Pro Tools, Nuendo, Ableton or Reaper, is one of the best things you could do. It’s incredibly flexible, while sounding great right out of the box.

How did you end up doing music and sound for games?

I came to Los Angeles with a film scoring degree from Berklee, thinking loosely that I would work my way into that field (strangely enough, I was offered an entry-level position as an assistant to one of the composers at Remote Control many years later, but at that point I had just finished paying my dues via a brutal first few projects at EA, so doing that all over again was not in the cards).

When I got to L.A., there was no work. It was right after 9/11, and the economy had crashed. After nearly a year of barely making rent, I went to a Berklee alumni event where I met Steve Schnur, the head of worldwide music at EA. Even in school, it was apparent to me that the games industry would be a great one to bet on and that, since it was based in technology, it would adapt better than the music industry (which was collapsing at the time) and film industry.

So, I went up to Steve and said, “I do music and sound, how do I get into games?” He gave me the name of the head of HR at EA Los Angeles at the time; I called and gave her the reference from Steve, to which she said, “We don’t have anything in Audio, but we have an opening in QA (Testing Games).” I told her I was interested, went through a rigorous interview process, and eventually got the gig testing games for a whopping $12 an hour.

My first day on the job I found out where the audio people sat and introduced myself to the team, telling them I wanted to do audio. I would generally deal with audio-related bugs, and managed to befriend some of the level designers who helped me along quite a bit. I would do all my work for QA, then try to convince people on the audio team to let me do anything, working for free at that point. It could be argued that one bug in particular, however, got me the job.

The game wasn’t doing well, and they were asking QA for suggestions since we played the game constantly. I wrote up a lengthy suggestion about how in stealth missions, the environment’s audio should react to both player and enemy movement. Meaning, if you are in the jungle with a bunch of crickets chirping, enemy movement would silence them, giving the player a clue as to where the enemy was; likewise, the player’s movement could give the enemy the same warning.

The suggestion was met with laughter, and lots of it. They had just transitioned from one audio system to another (I was completely unaware of how any of that worked at the time) and they were just happy if sound was playing at that point, so script-driven interactive audio was a ridiculous idea. But after I had been in QA for two months, Erik Kraber, the audio director who eventually hired me full-time at EA, gave me a shot, and had me working on audio while still technically being part of QA.

It’s worth noting that ten years later, using the Frostbite engine on Medal of Honor: Warfighter, I finally set up a simplified version of the reactive “cricket” environment I had described in my suggestion.
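For readers curious what a reactive ambience like this might look like in logic terms, here is a minimal sketch in Python. It is not how Frostbite or any shipped game implements it; the class names (`Mover`, `CricketEmitter`) and every parameter (`hush_radius`, `min_speed`, `recovery_per_sec`) are hypothetical, chosen only to illustrate the idea that nearby movement silences the crickets and that they fade back in once things are still.

```python
import math
from dataclasses import dataclass


@dataclass
class Mover:
    """A player or enemy; all fields are illustrative assumptions."""
    x: float
    y: float
    speed: float  # units per second; 0.0 means standing still


@dataclass
class CricketEmitter:
    """An ambient sound source whose gain reacts to nearby movement."""
    x: float
    y: float
    gain: float = 1.0  # 0.0 = silent, 1.0 = full chirping

    def update(self, movers, dt, hush_radius=8.0, min_speed=0.5,
               recovery_per_sec=0.25):
        # The crickets are disturbed if anything is moving fast enough
        # within the hush radius, regardless of whose side it is on.
        disturbed = any(
            m.speed >= min_speed
            and math.hypot(m.x - self.x, m.y - self.y) <= hush_radius
            for m in movers
        )
        if disturbed:
            # Cut out immediately, like startled insects.
            self.gain = 0.0
        else:
            # Fade back in gradually while nothing nearby is moving.
            self.gain = min(1.0, self.gain + recovery_per_sec * dt)
        return self.gain
```

In a real project the returned gain would typically drive a volume parameter on the ambience in the audio middleware, and an enemy AI could query the same emitters to "hear" the player's approach.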

You’re often working in-engine on many of these game projects. How long did it take to get comfortable working in Unity, Unreal, etc.? And how has this technical aspect of the work affected your approach?

When I started, the engines I worked with at EA were all home-grown. Also, each project often used a different engine, and there wasn’t a solid middleware like Wwise. Getting in the habit of constantly learning new software is part of the gig. It’s akin to having to learn a new DAW with every year-long project you were working on: the concepts are the same for the most part, but the names, menus and hot keys are all different. There wasn’t really a specific concept I remember struggling with, but learning the different systems certainly took time.

EA’s Frostbite was a major hurdle — if you notice, there’s a direct correlation between EA games failing, and their developer using Frostbite for the first time. The learning curve is crazy in that engine, but so is the payoff. It took about two years to really learn the audio system, but it really opened up the possibility of doing just about anything audio-wise, without any support from a programmer.

Wattam was my first real encounter with Unity, and they were using Wwise. Wwise is fantastic, and super intuitive if you come from a game audio background. We used it on God of War, and we’re using it on Star Wars with UE4.

If you want to get into AAA game audio, learn UE4 with Wwise. Both are free to download and will familiarize you with the key concepts. Wwise is better documented, IMHO, so good to start there. You’ll be overwhelmed at first, but don’t give up.

What’s it been like working for giants like Sony and EA? Are there major pipeline/workflow differences?

God of War was unique, in that it was the first game I ever worked on that was super collaborative within the sound department. Meaning, we would actually share sessions and build upon what other people on the team had done. Certain sets of sounds could pass through pretty much the entire core audio team, meaning that everyone had to be open to letting go of something that they might feel very strongly about. I think that is very challenging but very worthwhile, and it almost always resulted in something that was better in the end. It also pulled the team together, since it was no longer about individuals, but about what we were making together as a team. This was all audio lead Mike Niederquell’s doing, and I am grateful to him for creating that.

From my experience at EA, this type of collaboration is something that never happened. You owned the sound you were working on, brought it to completion as quickly as possible, and moved on. Sony, in general, was very different than EA: Sony’s culture definitely focused on taking the time to get it right, even if it became expensive for the company. EA was more about moving quickly and doing the best you could, but essentially getting it out the door whether it hit the “great” mark or not. It’s definitely a valuable lesson to learn and certainly gets your chops up, and the teams that do it enough, without massive shifts in workforce or pipelines, can be masters at it and create great games.