Got the basics down? Here's how to make ambient music that offers a little something extra.

We sit down with Josh Eustis to dissect the sounds and techniques behind Dreams Are Not Enough…

Discovering the creative powers of TRAKTOR for music production with Huerco S., Konx-om-Pax, and exael.

Learn about the collaborative project between Irish producer Eomac and the Japanese artist Kyoka.

PUSH TURN MOVE and PATCH & TWEAK author Kim Bjørn explores methods for interfacing with music through hardware and software.

Having scored countless advertisements, the UK producer breaks down his studio tools, workflow and tricks.

Electronic music pioneer discusses his love for REAKTOR BLOCKS, THRILL, and more.

Acclaimed sound designer Robert Dudzic divulges his secrets on recording, effects, and how to break into the industry.

Learn how to make your own track with Jacob Collier Audience Choir: a free choir VST plugin that brings expressive…

Explore free piano libraries for Kontakt that can give your music a vibrant, professional sound. From classical to contemporary, these…

Learn about the projects happening at Native Instruments in 2024 and our commitment to developing new and innovative products to…

Learn about the essential elements of film scoring, the process of scoring for visual media, and how to get started…

Discover basic DJ transitions that can keep the flow of your music. Learn how to move fluidly from one track…

Explore key features of drum sequencer plugins like pattern generation, randomization, and MIDI mapping to enhance your music production skills.

Become a better DJ by learning what beatmatching is, how to prepare your tracks and equipment to beatmatch, and how…

What is future house and how do you make it? Learn how to make future house music from inspiration to…

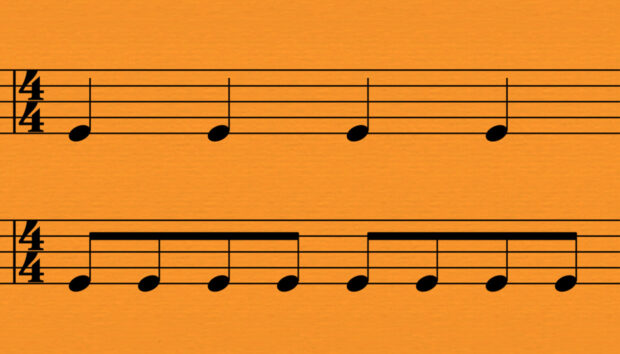

Unlock creativity with time signatures in music. Learn what time signatures are, common examples of popular time signatures, and how…

Learn how to add groove, realism, and complexity to your beats with this comprehensive tutorial on swing and humanization techniques…

Learn everything you need to know about synth pads, with a step-by-step guide to creating this crucial electronic sound to…

What is sub bass? Learn how to make sub bass and how to use sub bass effectively in your productions.

What are drum breaks? Learn the history of breakbeats and their importance in modern music. Then, learn how to recreate…