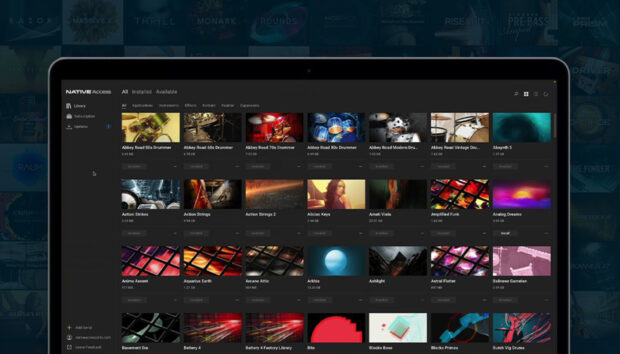

ARKHIS, the new KONTAKT instrument by Native Instruments and Orchestral Tools, might seem like a simple texture-generating plugin on the face of it. Look below the surface, however, and you’ll see a seriously powerful orchestral instrument driven by both exquisite recordings and creative signal processing.

“At Orchestral Tools we record in a very acoustic way,” says CEO and co-founder Hendrik Schwarzer. “We try to capture a sound that’s as close to the real thing as possible, whereas Native Instruments is coming at this more from a sound-design perspective. The hope was to build a bridge between those two worlds.”

With ARKHIS, you choose three orchestral sample layers, blend between them with the mod wheel, and then apply your choice of effects through a second macro control. If it sounds like a simple concept, that’s because it is; but a concept this simple can only work if the sound sources are right to begin with.

ARKHIS is full of dramatic strings, epic brass and haunting winds, but what are some of the more unusual instruments under the hood?

We’ve recorded several different tank drums, which aren’t super common in the orchestral palette, and we have a kind of plucked ensemble – not just guitars but also mandolins, dulcimers, a waterphone and some more unusual string instruments that we recorded in groups.

We also recorded six harps in a half circle. Normally you have a single harp in an orchestra, maybe two harps, but you never have six harps! We chose to go into a big room so we could play with the panorama and the room itself, so it sounds super-nice when you play one of these notes in ARKHIS. You can combine it with other sound sources like the strings, with the harp swelling in a little bit later.

The choir that we used was quite interesting, too. We have a small choir section and a larger one – the smaller one gives a bit more definition, the larger one has a more symphonic kind of sound.

Where does an Orchestral Tools recording usually take place?

We do most of our recordings at Teldex, which is a scoring stage in central Berlin. It’s quite a large space, comparable to Abbey Road [Studio One] – it’s more or less the same size. The acoustics are also very similar; it’s very balanced, quite high ceilings, and you can get more or less a 150-piece orchestra, including a choir, into it if you want.

For us it’s important, especially when we record orchestral instruments, that we record all the instruments in place, with the violins on the left and the cellos on the right. The natural dynamics and relationships between the instruments also have to be in perfect balance.

We also run [the orchestral recording service] Scoring Berlin, and right now we have a lot of sessions with orchestras. We’re doing a lot of work for some big-name streaming services, because the US scoring stages are currently closed due to the coronavirus. In Berlin right now, we’re allowed to hold sessions as long as the players keep 1.5 meters apart.

Do you go into a recording session with an exact plan, or do you sift through the recordings after to find the best parts?

It’s super hard to fix something after recording, so you need to have this idea of where you would like to end up with the sound before you start.

But it’s also really fun, because you have musicians who are used to playing from scores and doing things ‘exactly’ and ‘correctly,’ but because they’re recording a textural pad sound or different articulations, they get a lot more freedom. We might have a few musicians playing plucks for one long, sustained sound, and we control the sound by saying, “A little bit less, a little bit more, a little bit more crowded.” You get really different results; it’s a different way of working – like a synthesizer but with real musicians.

ARKHIS has lots of scope for tweaking the sounds, but are some of them already processed by you to an extent?

We also do a lot of sound design later on. We did things like pitching down samples and extending them, processing them with additional effects and reverb and whatever. That’s great, it’s an additional layer, but we tried to think from the beginning about the final product before we started the recording.

A lot of ‘FX’ are actually pre-recorded. For example, for the choir or for the strings, we told the musicians to detune, but only some of the players. So if you have a core section of 14 violins and just 40% of the players detune their sound a little bit, you get this kind of Doppler effect.
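To make the arithmetic concrete: 40% of 14 violins is 5.6, so roughly six players. The small Python sketch below is an illustrative model only, not Orchestral Tools’ process. It assumes the detuned players split evenly between sharp and flat by a fixed number of cents (the `cents` value and the function names are hypothetical), and it reports how fast each detuned player beats against the in-tune core, which is one plausible way to think about the moving, shimmering quality described above.

```python
def section_frequencies(base_hz, players, detune_fraction, cents):
    """Return one pitch per player; a fraction of the section is detuned.

    Hypothetical model: detuned players alternate between +cents and -cents,
    while everyone else plays exactly base_hz.
    """
    n_detuned = round(players * detune_fraction)  # 14 * 0.4 = 5.6 -> 6 players
    freqs = []
    for i in range(players):
        if i < n_detuned:
            sign = 1 if i % 2 == 0 else -1
            # A cent is 1/1200 of an octave, so the ratio is 2 ** (cents / 1200).
            freqs.append(base_hz * 2 ** (sign * cents / 1200))
        else:
            freqs.append(base_hz)
    return freqs


def beat_rates(freqs, base_hz):
    """Beat frequency (Hz) of each detuned player against the in-tune core."""
    return [abs(f - base_hz) for f in freqs if f != base_hz]


freqs = section_frequencies(440.0, players=14, detune_fraction=0.4, cents=10)
print(len(beat_rates(freqs, 440.0)), "detuned players")
```

With a 10-cent detune at A4, each detuned player beats against the core at roughly 2.5 Hz, slow enough to be heard as movement in the sound rather than as a wrong note.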

What have you learned about recording, sampling, editing and even distributing instruments since the beginning of Orchestral Tools?

For us the most important thing is always ‘the product’. There are three things that you need to care about, especially when you make sample libraries: it’s the room, it’s the musician and the instrument, and it’s the concept.

When it comes to the musician or the instrument, I also mean the performance itself. You really need to have an idea of how the articulations sound in a musical context. Sometimes it’s quite hard to tell, but just trying stuff out and doing test recordings helps. We do a lot of prototypes as well to see what works, what’s complicated, or what doesn’t work.

We also take some risks here and there with articulations. We just capture it, and sometimes at the end we see that maybe it wasn’t the way to go. All the samples are like snapshots of a performance, and even if it looks like a mechanical process to capture certain articulations, or certain single notes, it should sound like a true performance.

I’d say those three things are the most important: the room, the concept and the musician. If all three come together in a good way, then the chance is quite high that you will end up with a good product.

Do you have any thoughts about MIDI 2.0 and the sorts of things it could let you do?

I think the advantage with MIDI 2.0 is that we get a unified way of working, especially with articulations. If you work with electronic music, you tend to think in patches or single sounds, but with orchestral music you need to think about things in terms of articulations: Is it a short note? A long note? A tremolo? The first layer is the instrument, and then you have this other layer of the playing technique.

With MIDI 2.0 they tried to make things more uniform, so you know that with this kind of MIDI signal you get a tremolo, or with that kind of MIDI you get a staccato. Having this unified would help, because it would make it a lot easier for users to deal with sample libraries – it’s also easier for software developers to create tools that behave in the same way, within a single standard.

Is there any supporting technology that you’re itching to see built into MIDI or into DAW software?

For us in film composing it would be super-interesting if DAWs could look a few seconds ahead, so the sample player knows what’s coming in the arrangement. The sample player could then see that a very short note is coming and switch automatically to the staccato articulation, so that you as the user don’t have to deal with all that stuff. I think this kind of lookahead would be an interesting thing to have in the future.
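The reason lookahead matters is that a real-time player receives a note-on long before the matching note-off, so it cannot know a note’s length when the note starts. The Python sketch below illustrates the idea under stated assumptions: the host exposes upcoming notes with their start times and durations, and anything at or below a hypothetical 0.25-second threshold gets the staccato articulation. `Note`, `plan_ahead`, `STACCATO_MAX` and the two-second window are all illustrative names and values, not part of any DAW, KONTAKT or MIDI 2.0 API.

```python
from dataclasses import dataclass

STACCATO_MAX = 0.25  # hypothetical threshold in seconds


@dataclass(frozen=True)
class Note:
    pitch: int       # MIDI note number
    start: float     # seconds from the start of the arrangement
    duration: float  # seconds; only knowable in advance thanks to lookahead


def choose_articulation(note: Note) -> str:
    """Pick an articulation purely from the note's length."""
    return "staccato" if note.duration <= STACCATO_MAX else "sustain"


def plan_ahead(notes, playhead, lookahead=2.0):
    """Return (note, articulation) for notes starting inside the lookahead window."""
    window = [n for n in notes if playhead <= n.start < playhead + lookahead]
    return [(n, choose_articulation(n)) for n in sorted(window, key=lambda n: n.start)]


notes = [Note(60, 0.0, 1.5), Note(62, 1.6, 0.12), Note(64, 3.0, 0.1)]
for note, articulation in plan_ahead(notes, playhead=0.0):
    print(note.pitch, articulation)
```

Here the long note at 0.0 s is planned as a sustain and the short note at 1.6 s as a staccato, while the note at 3.0 s stays outside the window until the playhead moves closer. A real implementation would need more context than length alone (tempo, dynamics, legato transitions), which is part of why a shared standard would help.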

Learn more about ARKHIS and check out a special introductory offer here until August 3. You can also find more of Orchestral Tools’ pro-scoring essentials in our NKS partner shop.